One of the challenges of dealing with Big Data is to ensure data consistency across data sources. When working with Big Data, most platforms would likely be working across multiple databases, and, as anyone dealing with replication across data sources knows, there are several challenges involved in the replication. Consequently, verifying consistency is very important.

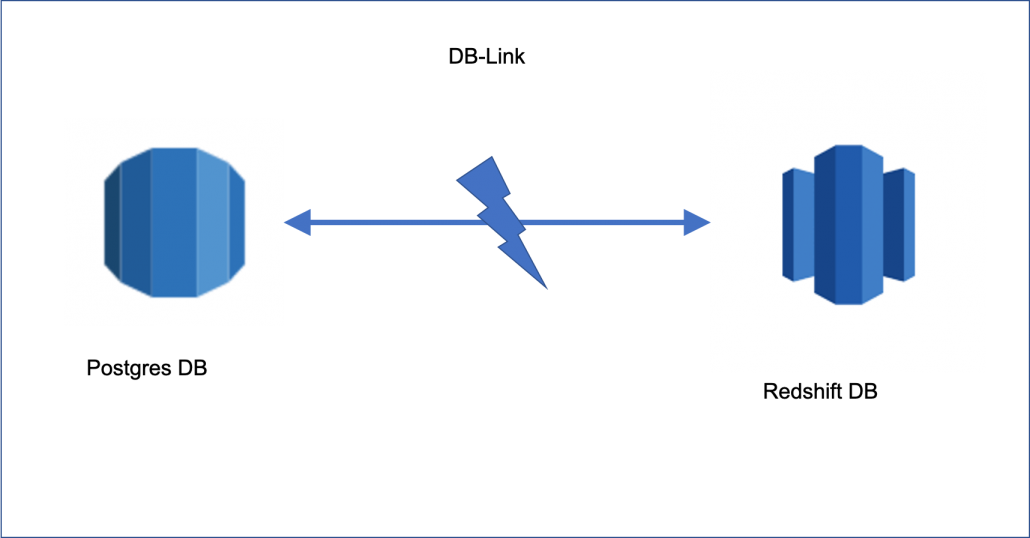

We at Intentwise have a use case where we replicate data from Postgres to Redshift to run complex analytical queries on Redshift. This replication happens multiple times a day and it is thus very important to maintain consistency between databases.

How do we verify data is consistent?

There are many ways in which data consistency can be verified — we use dblink between Postgres and Redshift to ensure data consistency.

Setup dblink in Postgres

Connect to Postgres and run the following SQL code, replacing the <placeholders> with the values from your own instances:

CREATE EXTENSION postgres_fdw; CREATE EXTENSION dblink; CREATE SERVER foreign_server FOREIGN DATA WRAPPER postgres_fdw OPTIONS (host '', port '', dbname '', sslmode 'require'); CREATE USER MAPPING FOR SERVER foreign_server OPTIONS (user '', password '');

You can also refer to dblink in PostgresSQL documentation.

Once the dblink is set up, you will be able to query Redshift tables from Postgres.

Verify data between databases

Consider the two example tables below:

Postgres Campaign table

| name | campaign_id | budget | status |

| Toy | 12345678 | 500 | enabled |

| Toys for 6 | 67779701 | 300 | paused |

| Toys for 8 | 12312355 | 100 | archived |

Redshift Campaign table

| name | campaign_id | budget | status |

| Toy | 12345678 | 500 | enabled |

| Toys for 6 | 67779701 | 300 | paused |

If you compare the two campaign tables above, you would see that Campaign Row “Toys for 8” is missing in Redshift.

How do we verify this with dblink?

We have written a program in Java and deployed it as AWS Serverless Lambda functions. Serverless lambdas are a great way to deploy standalone programs like these. Lambdas can be invoked with a scheduler like CloudWatch or even by posting an event; ‘N’ number of Lambda can run in parallel.

Query to verify the discrepancy

There are many ways the data between two tables can be verified. One of the ways is to use except operator as shown below:

select

name,

campaign_id,

budget,

status

from

campaign_postgres except all

select

name,

campaign_id,

budget,

status

from

dblink('foreign_server', $ REDSHIFT $

select

name, campaign_id, budget, status

from

campaign_redshift $ REDSHIFT $ ) as T(name VARCHAR, campaign_id BIGINT, budget decimal, state VARCHAR)

In the above query

- The dblink function accepts the server connection (‘foreign_server’) that was created in the previous step.

- The SQL query is passed in as a string between double dollar quotes ($REDSHIFT$). This piece of the query will be executed in Redshift and the data would be returned back to Postgres.

- When using dblink with Redshift we need to define the datatypes of Resultset which is defined after T in the above query.

The above query would return the following result:

| name | campaign_id | budget | status |

| Toys for 8 | 12312355 | 100 | archived |

This tells us that “Toys for 8” exists in Postgres but not in Redshift. If even one of the values which is being compared is different we would be able to surface that with the above query.

Conclusion

Dblink is a good way to verify consistency between different databases. Everything around Intentwise runs around data and our customers rely on data for doing analysis and taking important decisions, so we at Intentwise take any data discrepancy seriously and this is one of the many things we do to ensure data consistency.

References

AWS blog on using dblink

Intentwise’s post on database migration using DMS reference

Read our post “Database Connection Tools” for a list of the best tools to connect to a database.